- HOW TO INSTALL SPARK FROM SOURCE CODE HOW TO

- HOW TO INSTALL SPARK FROM SOURCE CODE UPDATE

- HOW TO INSTALL SPARK FROM SOURCE CODE DOWNLOAD

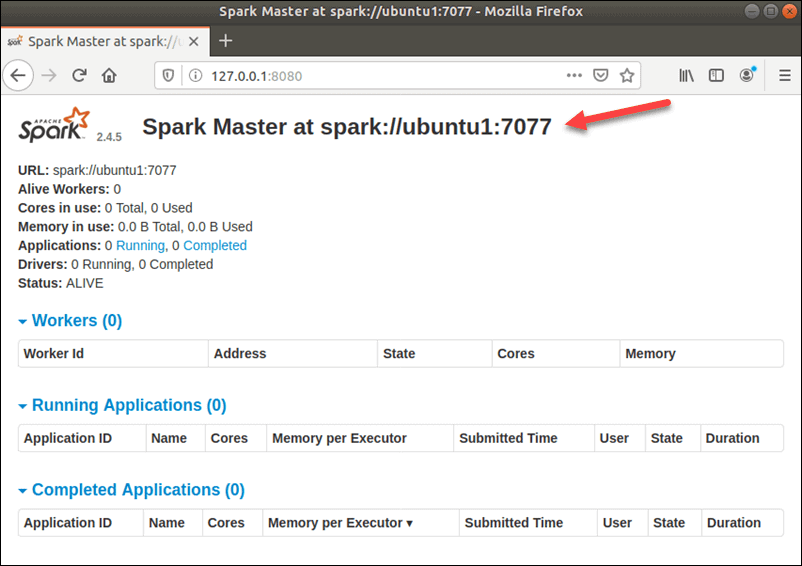

To make sure we don't delete the extracted folder accidentally, let's place it somewhere safe, i.e. the /opt directory:

    sudo mkdir /opt/spark
    sudo tar -xf spark*.tgz -C /opt/spark --strip-components=1

Also, change the permissions of the folder so that Spark can write inside it.

Now that we have moved the files to the /opt directory, running a Spark command in the terminal would require typing its full path every time, which is annoying. To solve this, we configure environment variables for Spark by adding its home paths to the system's profile/bashrc file. This lets us run its commands from anywhere in the terminal, regardless of which directory we are in. Use `>>` to append: a single `>` would overwrite the whole ~/.bashrc. The single quotes stop `echo` from expanding `$PATH` and `$SPARK_HOME`, so they are resolved each time the shell starts:

    echo 'export SPARK_HOME=/opt/spark' >> ~/.bashrc
    echo 'export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin' >> ~/.bashrc
    echo 'export PYSPARK_PYTHON=/usr/bin/python3' >> ~/.bashrc
    source ~/.bashrc

Start Apache Spark master server on Ubuntu

As we have already configured the environment variables for Spark, let's start its standalone master server by running its script:

    start-master.sh

Change Spark Master Web UI and Listen Port (optional, use only if required)

By default, the master listens on port 7077 and serves its web UI on port 8080. If you want to use custom ports, pass them to the script with the `--port` and `--webui-port` options, for example `start-master.sh --port 7078 --webui-port 8081`.
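Editing ~/.bashrc with `echo` is easy to get wrong: a single `>` truncates the file, and re-running an append adds duplicate lines. As a hedged alternative, here is a minimal bash sketch that writes the same three exports only if they are not already present. The helper names `append_once` and `configure_spark_env` are hypothetical and not part of Spark; the paths are the ones used in this tutorial.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of Spark): append a line to a file only if an
# identical line is not already present, so re-running the setup is harmless.
append_once() {
  local file="$1" line="$2"
  touch "$file"
  # Make sure the file ends with a newline before appending to it.
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    printf '\n' >> "$file"
  fi
  # -x: whole-line match, -F: fixed string (no regex), so $PATH stays literal.
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Hypothetical helper: write the Spark settings used in this tutorial to an rc
# file (defaults to ~/.bashrc). Single quotes keep $PATH and $SPARK_HOME
# unexpanded until the shell reads the file.
configure_spark_env() {
  local rc="${1:-$HOME/.bashrc}"
  append_once "$rc" 'export SPARK_HOME=/opt/spark'
  append_once "$rc" 'export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin'
  append_once "$rc" 'export PYSPARK_PYTHON=/usr/bin/python3'
}
```

Usage: run `configure_spark_env && source ~/.bashrc`; passing another file, e.g. `configure_spark_env ~/.profile`, writes the exports there instead.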


Simply copy the download link of the Spark package and use it with wget, or download the file directly to your system.
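If you prefer to script the download, a minimal sketch follows. It assumes the Apache archive layout `https://archive.apache.org/dist/spark/spark-<version>/spark-<version>-bin-<package>.tgz` and defaults to the `hadoop3.2` pre-built package offered for Spark 3.1.x (3.1.2 is the version used in this tutorial); the helper name `spark_download_url` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the download URL of a pre-built Spark release.
# Assumes the Apache archive layout
#   https://archive.apache.org/dist/spark/spark-<version>/spark-<version>-bin-<package>.tgz
# and defaults to the "hadoop3.2" package.
spark_download_url() {
  local version="$1" package="${2:-hadoop3.2}"
  printf 'https://archive.apache.org/dist/spark/spark-%s/spark-%s-bin-%s.tgz\n' \
    "$version" "$version" "$package"
}

# Example (left commented out because it downloads a large archive):
# wget "$(spark_download_url 3.1.2)"
```

Pass a second argument such as `hadoop2.7` to pick a different pre-built package, if one exists for that release.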

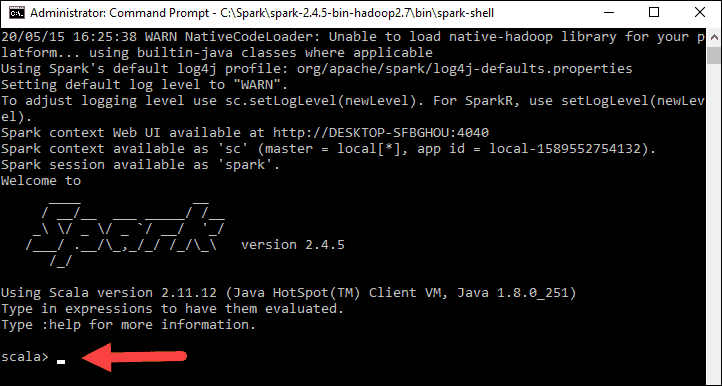

Apache Spark is used by data engineers and data scientists to run extremely fast queries on large amounts of data, in the terabyte range. It is a framework for cluster-based computation that competes with classic Hadoop MapReduce by using the RAM available in the cluster to execute jobs faster. For this purpose, the framework offers in-memory technologies, i.e. it can hold data directly in the main memory of the cluster nodes, which makes Spark ideal for processing large amounts of data quickly. In addition, Spark lets you query data via SQL, process streaming data in (near) real time, and provides its own graph processing library (GraphX) and machine learning library (MLlib). Spark's programming model is based on Resilient Distributed Datasets (RDDs), collections whose elements are partitioned across the nodes of a cluster and processed in parallel. This open-source platform supports a variety of programming languages such as Java, Scala, Python, and R.

Steps for Apache Spark installation on Ubuntu 20.04: the steps given here can also be used on other Ubuntu versions such as 21.04 and 18.04, as well as on Linux Mint, Debian, and similar Linux distributions. First, we install the latest available version of Java, which Apache Spark requires, along with Git and Scala to extend its capabilities. Then visit the official Spark website and download the latest available version. At the time of writing this tutorial, the latest version was 3.1.2, so that is the one downloaded here; if a newer version is available when you install Spark on your Ubuntu system, go for that.
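Java, Git, and Scala are named above as prerequisites. Before installing Spark, a small check like the following can confirm they are on your PATH. This is a hedged sketch: the helper names are hypothetical, and the suggested `apt` packages (`default-jdk`, `git`, `scala`) are one common choice on Ubuntu, not the only one.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print (one per line) each given command that is not on PATH.
missing_commands() {
  local cmd
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || printf '%s\n' "$cmd"
  done
}

# Hypothetical helper: check for the tools this tutorial installs before Spark.
# Returns non-zero if any of them is missing.
check_spark_prereqs() {
  local missing
  missing=$(missing_commands java git scala)
  if [ -n "$missing" ]; then
    echo "Missing:" $missing  # unquoted on purpose: prints the names on one line
    echo "On Ubuntu, one option is: sudo apt install default-jdk git scala -y"
    return 1
  fi
  echo "java, git and scala are available"
}
```

Run `check_spark_prereqs` after installing the packages; it prints which tools, if any, are still missing.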


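Here we will see how to install Apache Spark on Ubuntu 20.04 or 18.04; the commands also apply to Linux Mint, Debian, and other similar Linux systems. Apache Spark is a general-purpose data processing tool, also known as a data processing engine.

The following Java fragment shows the update step of modifying a Delta Lake table. It assumes an active SparkSession with Delta Lake on the classpath and an existing Delta table at /tmp/delta-table:

    import io.delta.tables.*;
    import java.util.HashMap;
    import java.util.Map;
    import org.apache.spark.sql.Column;
    import org.apache.spark.sql.functions;

    DeltaTable deltaTable = DeltaTable.forPath("/tmp/delta-table");

    // Update every even value by adding 100 to it
    Map<String, Column> newValues = new HashMap<>();
    newValues.put("id", functions.expr("id + 100"));
    deltaTable.update(functions.expr("id % 2 == 0"), newValues);

You should see that some of the existing rows have been updated and new rows have been inserted. For more information on these operations, see Table deletes, updates, and merges.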
